Add a Primary Storage

On the main menu of ZStack Cloud, choose . On the Primary Storage page, click Add Primary Storage. Then, the Add Primary Storage page is displayed.

- Add a LocalStorage primary storage.

- Add an NFS primary storage.

- Add a SharedMountPoint (SMP) primary storage.

- Add a Ceph primary storage.

- Add a SharedBlock primary storage.

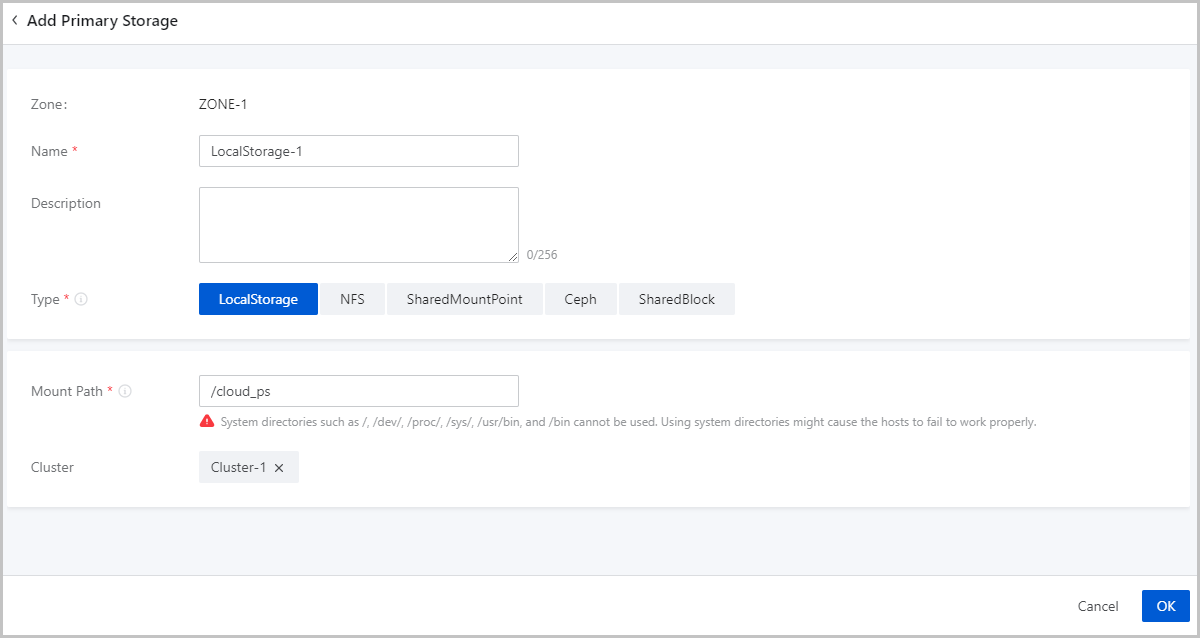

Add a LocalStorage Primary Storage

- Zone: By default, the current zone is displayed.

- Name: Enter a name for the primary storage.

The primary storage name must be 1 to 128 characters in length and can contain Chinese characters, letters, digits, hyphens (-), underscores (_), periods (.), parentheses (), colons (:), and plus signs (+).

- Description: Optional. Enter a description for the primary storage.

- Type: Select LocalStorage.

Note:

- If you select LocalStorage, the Cloud uses the hard disks of each host as a primary storage. LocalStorage primary storages can work with ImageStore and SFTP backup storages. The total capacity of a LocalStorage primary storage is the sum of the capacities of the mount directories on all hosts.

- If you attach multiple LocalStorage primary storages, make sure that each LocalStorage primary storage is deployed on an exclusive logical volume or physical disk.

- Mount Path: Enter the directory for mounting the LocalStorage primary storage.

Note:

- If the directory you entered does not exist, the Cloud automatically creates one.

- The following system directories cannot be used. Otherwise, the hosts might fail to work properly:

- /

- /dev

- /proc

- /sys

- /usr/bin

- /bin

- Cluster: Select a cluster to which the LocalStorage primary storage is attached.
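Before you enter a mount path, you can check it against the system directories listed above. The following POSIX shell function is a minimal sketch and is not part of ZStack Cloud. The name check_mount_path is hypothetical. It strips trailing slashes and then matches only the exact directories in the list. It does not resolve symbolic links or .. components, so treat it as a quick sanity check rather than a complete validation.

```shell
# Hypothetical helper (not part of ZStack Cloud): reject the system
# directories that must not be used as a primary storage mount path.
# Trailing slashes are stripped first, so "/dev/" is treated as "/dev".
# Symbolic links and ".." components are not resolved.
check_mount_path() {
    _mp=$1
    # The mount path must be absolute.
    case $_mp in
        /*) ;;
        *) echo "error: $_mp is not an absolute path" >&2; return 1 ;;
    esac
    # Strip trailing slashes, but keep "/" itself.
    while [ "$_mp" != "/" ] && [ "${_mp%/}" != "$_mp" ]; do
        _mp=${_mp%/}
    done
    # Exact matches against the directories listed in this section.
    case $_mp in
        /|/dev|/proc|/sys|/usr/bin|/bin)
            echo "error: $_mp is a system directory and cannot be used" >&2
            return 1 ;;
    esac
    echo "ok: $_mp"
}

# Examples:
#   check_mount_path /zstack_ps/   # prints "ok: /zstack_ps"
#   check_mount_path /dev/         # prints an error and returns 1
```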

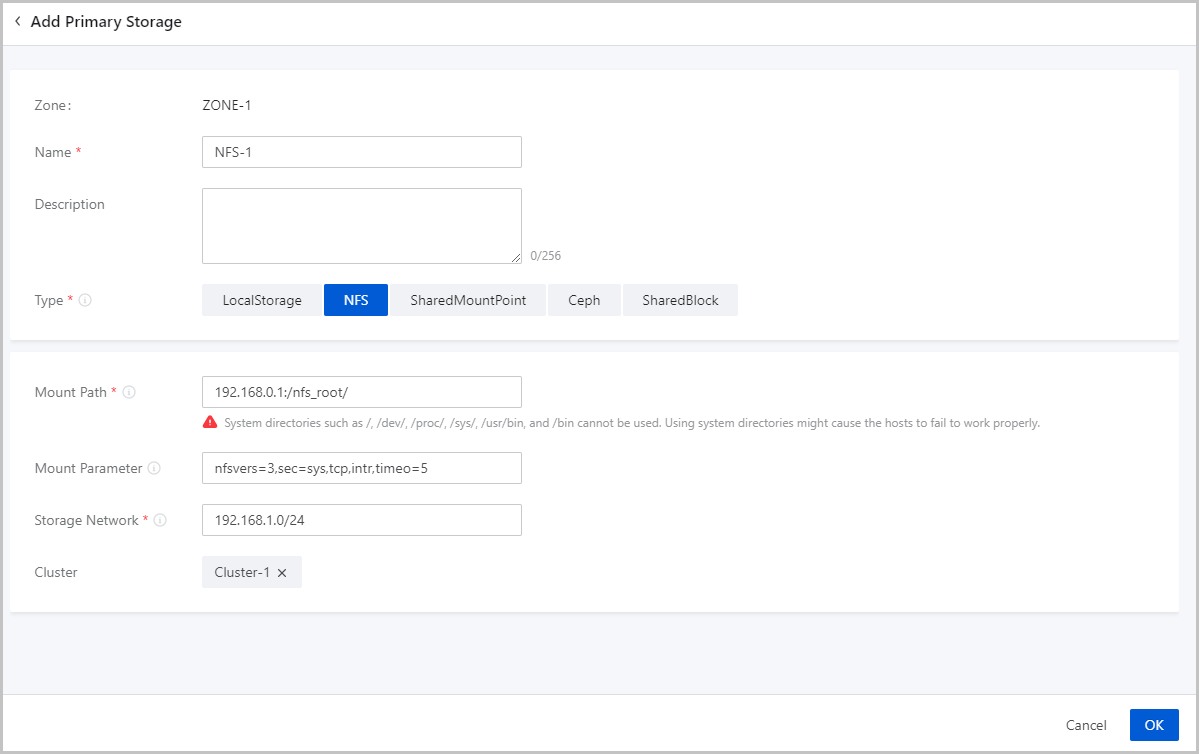
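Here is a worked example of the LocalStorage capacity rule. Suppose the mount directories on three hosts have 500 GiB, 500 GiB, and 1,000 GiB of capacity. The LocalStorage primary storage then has 500 + 500 + 1,000 = 2,000 GiB in total. The sketch below does this addition. sum_capacity_gib is a hypothetical helper. The df command in the comment (GNU coreutils) is one assumed way to read the capacity of the file system that holds the mount directory on each host, with /zstack_ps as an example path.

```shell
# Hypothetical helper: add up per-host capacities (whole GiB) to get the
# total capacity of a LocalStorage primary storage.
sum_capacity_gib() {
    _total=0
    for _cap in "$@"; do
        _total=$((_total + _cap))
    done
    echo "$_total"
}

# One assumed way to read the capacity (in GiB) of the file system that holds
# the mount directory on a host, using GNU coreutils df and the example path /zstack_ps:
#   df -BG --output=size /zstack_ps | tail -n 1 | tr -dc '0-9'
#
# Example: sum_capacity_gib 500 500 1000   # prints 2000
```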

Add an NFS Primary Storage

- Zone: By default, the current zone is displayed.

- Name: Enter a name for the primary storage.

The primary storage name must be 1 to 128 characters in length and can contain Chinese characters, letters, digits, hyphens (-), underscores (_), periods (.), parentheses (), colons (:), and plus signs (+).

- Description: Optional. Enter a description for the primary storage.

- Type: Select NFS.

Note: If you select NFS, ZStack Cloud automatically mounts the same NFS shared directory on all hosts as a primary storage. NFS primary storages can work with ImageStore and SFTP backup storages.

- Mount Path: Enter the shared directory of the NFS server. The server can be specified by either an IP address or a domain name.

Note:

- Format: NFS_Server_IP:/NFS_Share_folder. For example, 192.168.0.1:/nfs_root.

- You need to set the access permissions of the corresponding directories on the NFS server in advance.

- To ensure security control on the NFS server, we recommend that you configure corresponding security rules for access control.

- You can run the showmount -e command on the NFS server in advance to check its shared directories.

- The following system directories cannot be used. Otherwise, the hosts might fail to work properly:

- /

- /dev

- /proc

- /sys

- /usr/bin

- /bin

- Mount Parameter: Optional. Before you specify mount parameters, make sure that these parameters are supported by the NFS server.

Note:

- Separate parameters with commas (,). For example, nfsvers=3,sec=sys,tcp,intr,timeo=5 means the following: NFS version 3 is used, the standard UNIX authentication mechanism (sec=sys) is used, TCP is the transport protocol, NFS calls can be interrupted in case of an exception, and the client waits 0.5 seconds for a response before it retries a request. The timeo value is measured in tenths of a second, so timeo=5 is 5/10 of a second.

- For the available parameters, refer to the options that the mount command accepts with its -o option.

- You can set the parameters in the same way as for the mount command on common NFS clients. If a parameter conflicts with the NFS server configuration, the server configuration takes precedence.

- Storage Network: Enter the storage network specified for the shared storage. The storage network can be the management network of the management node.

Note:

- If you have a dedicated storage network, enter its CIDR.

- You can use the storage network to check the health status of a VM instance.

- Cluster: Select a cluster to which the NFS primary storage is attached.
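You can check the Mount Path format NFS_Server_IP:/NFS_Share_folder before you submit it. The following sketch uses the hypothetical name parse_nfs_url. It splits a value such as 192.168.0.1:/nfs_root at the first colon into the server and the exported directory. It rejects values that have no server part or no absolute export path. It assumes an IPv4 address or a domain name, so IPv6 literals are not handled. The showmount -e call in the comment is the check mentioned in the notes above.

```shell
# Hypothetical helper: split an NFS mount path in the form
# NFS_Server_IP:/NFS_Share_folder into its server and export parts.
parse_nfs_url() {
    _url=$1
    _server=${_url%%:*}     # everything before the first colon
    _export=${_url#*:}      # everything after the first colon
    if [ "$_server" = "$_url" ] || [ -z "$_server" ]; then
        echo "error: expected NFS_Server_IP:/NFS_Share_folder" >&2
        return 1
    fi
    # The exported directory must be an absolute path.
    case $_export in
        /*) ;;
        *) echo "error: export path must start with /" >&2; return 1 ;;
    esac
    echo "server=$_server export=$_export"
}

# Example:
#   parse_nfs_url 192.168.0.1:/nfs_root   # prints "server=192.168.0.1 export=/nfs_root"
# List the directories that the server exports (requires nfs-utils):
#   showmount -e 192.168.0.1
```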

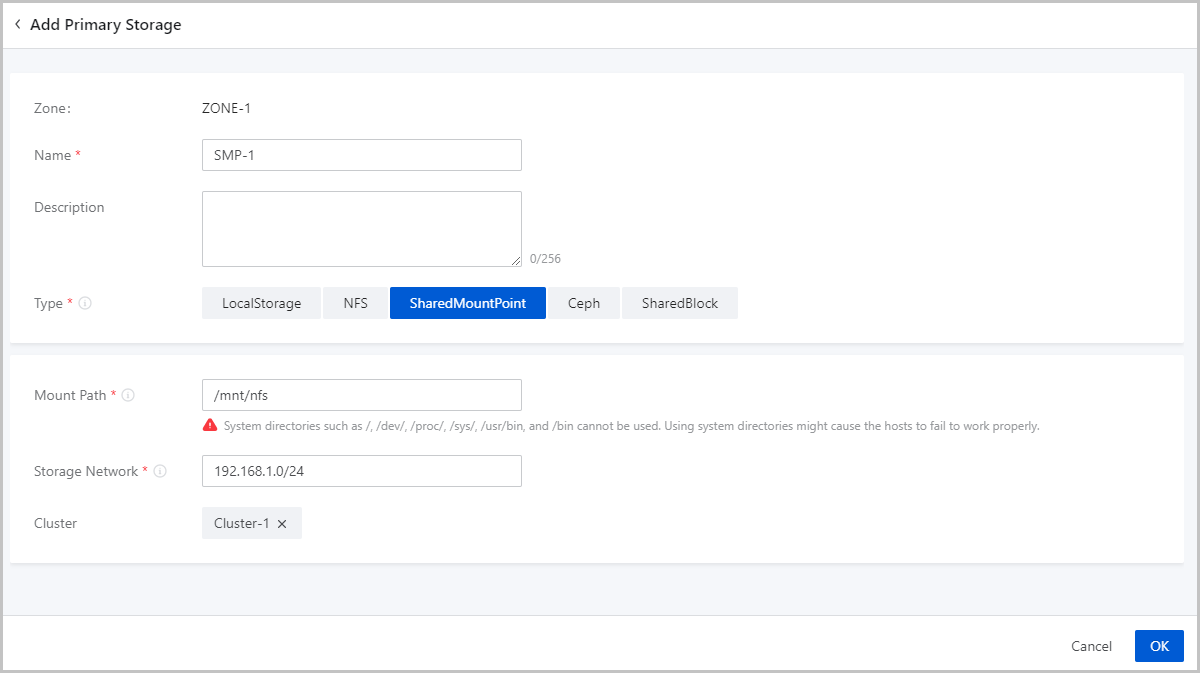
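The timeo value in the Mount Parameter example is expressed in tenths of a second, so timeo=5 corresponds to 0.5 seconds. The sketch below extracts timeo from a comma-separated parameter string and converts it to seconds. nfs_timeo_seconds is a hypothetical name. The commented mount command shows one way to try the same parameters manually on a single host before you enter them. It assumes /mnt/nfs_test as a temporary, empty mount point and uses the example export 192.168.0.1:/nfs_root.

```shell
# Hypothetical helper: read timeo from a comma-separated NFS mount parameter
# string and print it in seconds.
nfs_timeo_seconds() {
    # Split the parameters on commas and keep the value of the last timeo=.
    _timeo=$(printf '%s\n' "$1" | tr ',' '\n' | sed -n 's/^timeo=//p' | tail -n 1)
    case $_timeo in
        ''|*[!0-9]*)
            echo "error: no numeric timeo parameter found" >&2
            return 1 ;;
    esac
    # timeo is in tenths of a second, so divide by 10.
    awk -v t="$_timeo" 'BEGIN { printf "%.1f\n", t / 10 }'
}

# Example:
#   nfs_timeo_seconds nfsvers=3,sec=sys,tcp,intr,timeo=5   # prints 0.5
# Manual trial mount with the same parameters (as root, on one host):
#   mkdir -p /mnt/nfs_test
#   mount -t nfs -o nfsvers=3,sec=sys,tcp,intr,timeo=5 192.168.0.1:/nfs_root /mnt/nfs_test
#   umount /mnt/nfs_test
```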

Add an SMP Primary Storage

Before you add an SMP primary storage, configure the corresponding distributed file system in advance. Then mount the shared file system to the same path on each host, as required by the client of your storage system. The following steps use MooseFS as an example.

- Download and install the MooseFS client tool mfsmount, and create a directory as the mount point.

- Assuming that the IP address of the MooseFS master server is 172.20.12.19, create /mnt/mfs as the mount point, and run the mfsmount command to mount the MooseFS file system.

- Optionally, run the mfssetgoal command to set the number of copies that MooseFS saves for each file.

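```shell
# Run the following commands as root.
# On each host: create the mount point and mount the MooseFS file system
# served by the master server at 172.20.12.19.
mkdir -p /mnt/mfs
mfsmount /mnt/mfs -H 172.20.12.19
# Create a directory for the primary storage on the shared file system.
mkdir -p /mnt/mfs/zstack
# Recursively set the goal of the directory to 2 so that MooseFS keeps two copies of each file.
mfssetgoal -r 2 /mnt/mfs/zstack/
```

The preceding commands mount the MooseFS file system served by the master server at 172.20.12.19 to /mnt/mfs, and make MooseFS save two copies of each file in the /mnt/mfs/zstack/ directory.

- Zone: By default, the current zone is displayed.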

- Name: Enter a name for the primary storage.

The primary storage name must be 1 to 128 characters in length and can contain Chinese characters, letters, digits, hyphens (-), underscores (_), periods (.), parentheses (), colons (:), and plus signs (+).

- Description: Optional. Enter a description for the primary storage.

- Type: Select SharedMountPoint.

Note:

- For SMP primary storages, ZStack Cloud supports network shared storage provided by commonly used distributed file systems such as MooseFS, GlusterFS, OCFS2, and GFS2.

- SMP primary storages can work with ImageStore and SFTP backup storages.

- Mount Path: Enter the directory of the shared storage mounted by the host.

Note:

- The following system directories cannot be used. Otherwise, the hosts might fail to work properly:

- /

- /dev

- /proc

- /sys

- /usr/bin

- /bin

- Storage Network: Enter the storage network specified for the shared storage. The storage network can be the management network of the management node.

Note:

- If you have a dedicated storage network, enter its CIDR.

- You can use the storage network to check the health status of a VM instance.

- Cluster: Select a cluster to which the SMP primary storage is attached.
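Every host must have the shared file system mounted at the same path. On each host, you can confirm that the SMP mount path is an actual mount point rather than an ordinary local directory. If the mount is missing, files written to that path end up on the host's local disk. The sketch below uses the hypothetical name is_mount_point. It looks the path up in the Linux mount table, which is /proc/mounts by default. Paths are compared literally, so paths containing spaces (which /proc/mounts encodes as \040) are not handled.

```shell
# Hypothetical helper: succeed only if directory $1 appears as a mount point
# in a mount table ($2, default /proc/mounts on Linux). Paths are compared
# literally; one trailing slash is ignored.
is_mount_point() {
    _dir=${1%/}
    _dir=${_dir:-/}
    _table=${2:-/proc/mounts}
    # Field 2 of each mount-table line is the mount point.
    awk -v d="$_dir" '$2 == d { found = 1 } END { exit !found }' "$_table"
}

# Example, run on each host in the cluster:
#   is_mount_point /mnt/mfs && echo "mounted" || echo "NOT mounted"
```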

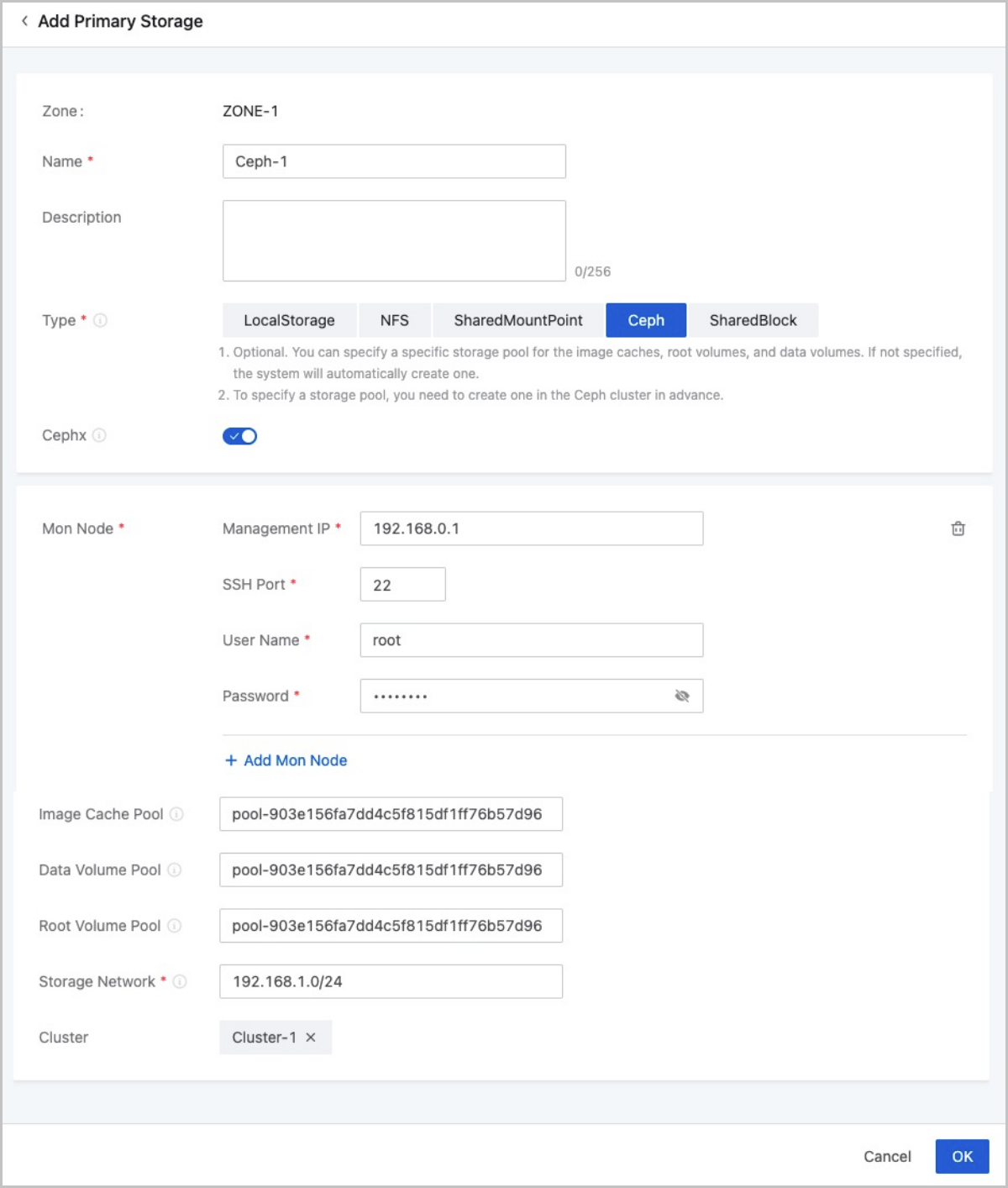
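The Storage Network field takes a CIDR, for example 192.168.100.0/24 (an illustrative value, not a default). You can confirm that a host's storage IP address falls inside the CIDR you plan to enter. Convert both addresses to 32-bit integers, build a mask from the prefix length, and compare the masked values. The POSIX shell sketch below does this for IPv4 only. ip_to_int and ip_in_cidr are hypothetical helpers.

```shell
# Hypothetical helper: convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
    _o1=${1%%.*}; _rest=${1#*.}
    _o2=${_rest%%.*}; _rest=${_rest#*.}
    _o3=${_rest%%.*}; _o4=${_rest#*.}
    echo $(( ((_o1 * 256 + _o2) * 256 + _o3) * 256 + _o4 ))
}

# Hypothetical helper: succeed if IPv4 address $1 lies inside CIDR $2.
ip_in_cidr() {
    case $2 in
        */*) ;;
        *) echo "error: expected a CIDR such as 192.168.100.0/24" >&2; return 1 ;;
    esac
    _ip=$(ip_to_int "$1")
    _net=$(ip_to_int "${2%/*}")
    _len=${2#*/}
    # Build the network mask from the prefix length (for example, /24 -> 255.255.255.0).
    if [ "$_len" -eq 0 ]; then
        _mask=0
    else
        _mask=$(( (0xFFFFFFFF << (32 - _len)) & 0xFFFFFFFF ))
    fi
    # The address is inside the CIDR if both share the same network bits.
    [ $(( _ip & _mask )) -eq $(( _net & _mask )) ]
}

# Example:
#   ip_in_cidr 192.168.100.21 192.168.100.0/24 && echo "inside"
```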

Add a Ceph Primary Storage

ZStack Cloud supports Ceph block storages. Before you can add a Ceph primary storage, add a Ceph or an ImageStore backup storage, and configure the Ceph distributed storage in advance.

- Zone: By default, the current zone is displayed.

- Name: Enter a name for the primary storage.

The primary storage name must be 1 to 128 characters in length and can contain Chinese characters, letters, digits, hyphens (-), underscores (_), periods (.), parentheses (), colons (:), and plus signs (+).

- Description: Optional. Enter a description for the primary storage.

- Type: Select Ceph.

Note: ZStack Cloud primary storages can work with the following Ceph editions:

- Ceph open-source edition: Jewel series, Luminous series, and Nautilus series.

- Ceph Enterprise: All released versions of Ceph Enterprise. If data security and I/O performance are your main concerns, we recommend Ceph Enterprise. For more information, contact our official technical support.

- Cephx: Optional. Determine whether to enable Ceph authentication.

Note:

- By default, Ceph authentication is enabled.

- If the network between the storage nodes and the compute nodes is trusted, you can disable Cephx to avoid Ceph authentication failures.

- Make sure that this option is consistent with the key authentication (Cephx) setting of the Ceph storage. If the two settings are inconsistent, VM creation may fail.

- Mon Node: Enter the IP address, SSH port, user name, and password of the Ceph monitor.

- Management IP: Enter the IP address of the Ceph monitor.

- SSH Port: Enter the SSH port of the Ceph monitor. Default: 22.

- User Name: Enter the user name of the Ceph monitor.

- Password: Enter the password of the Ceph monitor.

You can click Add Mon Node to add more Ceph monitors.

- Image Cache Pool: Optional. Enter the name of an image cache storage pool.

Note:

- You can specify a storage pool for image caches. If not specified, the Cloud creates one automatically.

- Before you can specify a storage pool, create one in the corresponding Ceph cluster first.

- Data Volume Pool: Optional. Enter the name of a data volume storage pool.

Note:

- You can specify a storage pool for data volumes. If not specified, the Cloud creates one automatically.

- Before you can specify a storage pool, create one in the corresponding Ceph cluster first.

- Root Volume Pool: Optional. Enter the name of a root volume storage pool.

Note:

- You can specify a storage pool for root volumes. If not specified, the Cloud creates one automatically.

- Before you can specify a storage pool, create one in the corresponding Ceph cluster first.

- Storage Network: Enter the storage network specified for the shared storage. The storage network can be the management network of the management node.

Note:

- You can use the storage network to check the health status of a VM instance.

- We recommend that you plan a dedicated storage network to avoid potential risks.

- Cluster: Select a cluster to which the Ceph primary storage is attached.
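The Cephx option must agree with the authentication setting of the Ceph cluster. Before you decide, you can check the auth_cluster_required, auth_service_required, and auth_client_required keys in the cluster's ceph.conf, which is commonly /etc/ceph/ceph.conf on a monitor node. The sketch below prints those three keys from a ceph.conf file. cephx_settings is a hypothetical helper. It accepts keys written with spaces or underscores and ignores commented-out lines. It does not track [section] headers or settings kept outside the file (for example, values set with ceph config on newer releases), so confirm any doubtful result against the cluster itself.

```shell
# Hypothetical helper: print the three Cephx-related auth keys found in a
# ceph.conf file (default /etc/ceph/ceph.conf). Missing keys are reported as
# "<not set>". If a key appears more than once, the last occurrence wins.
cephx_settings() {
    _conf=${1:-/etc/ceph/ceph.conf}
    for _k in auth_cluster_required auth_service_required auth_client_required; do
        _v=$(awk -F= -v want="$_k" '
            {
                key = $1; val = $2
                gsub(/^[ \t]+|[ \t]+$/, "", key)   # trim the key
                gsub(/^[ \t]+|[ \t]+$/, "", val)   # trim the value
                gsub(/[ \t]+/, "_", key)           # "auth cluster required" -> "auth_cluster_required"
            }
            key == want { found = val }
            END { print found }' "$_conf")
        echo "$_k=${_v:-<not set>}"
    done
}

# Example, on a Ceph monitor node:
#   cephx_settings /etc/ceph/ceph.conf
```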

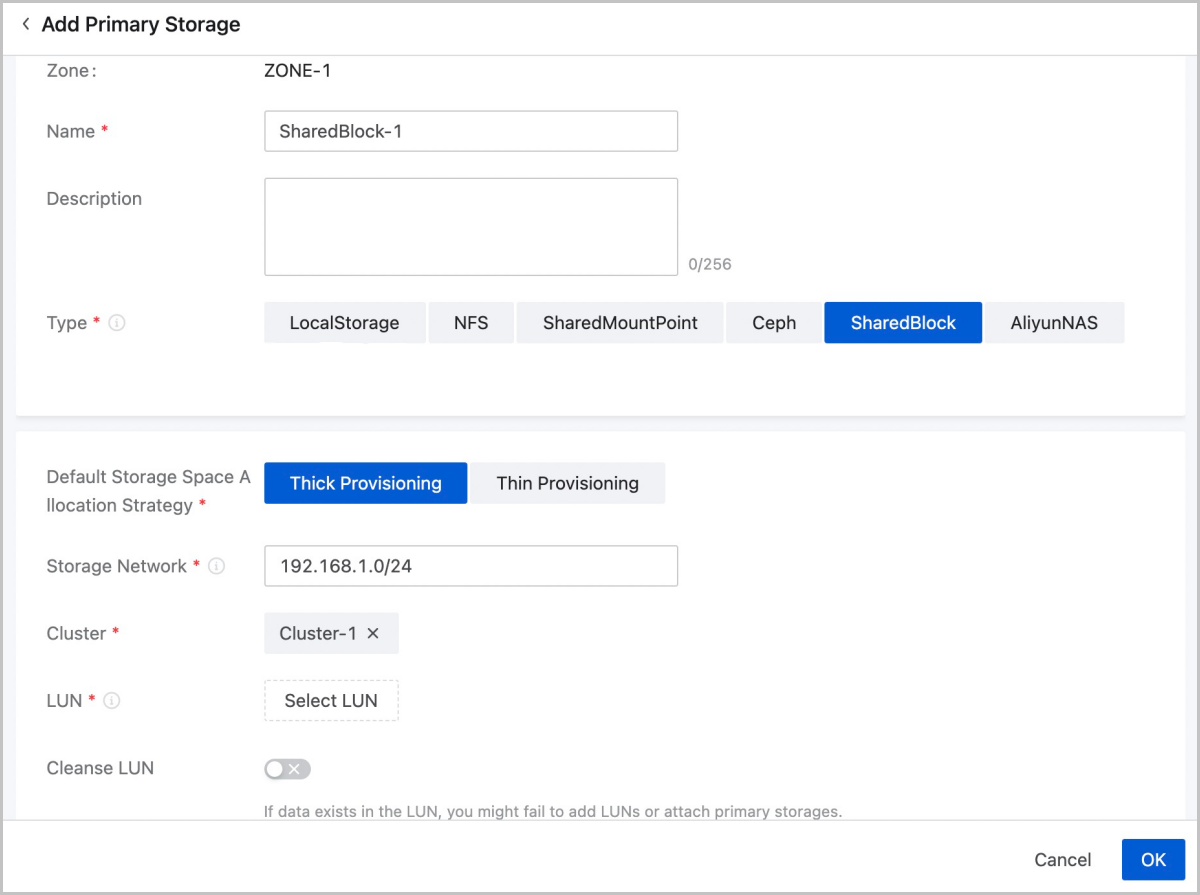
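If you specify your own image cache, data volume, or root volume pools, you must create them in the Ceph cluster first. The following sketch assumes that it runs on a Ceph node with admin credentials. The pool names zs-image-cache, zs-data-volume, and zs-root-volume and the placement-group count of 128 are hypothetical values to adapt to your cluster. ceph osd pool create and rbd pool init (available from Luminous) are standard Ceph commands. The DRY_RUN switch is a convenience of this sketch that prints the commands instead of running them.

```shell
# Print commands instead of running them when DRY_RUN=1 (a convenience of this sketch).
run_or_print() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Hypothetical pool names and placement-group count; adapt them to your cluster.
create_zstack_pools() {
    _pg=${PG_NUM:-128}
    for _pool in zs-image-cache zs-data-volume zs-root-volume; do
        # Create a replicated pool with the given number of placement groups.
        run_or_print ceph osd pool create "$_pool" "$_pg"
        # Initialize the pool for RBD use (Luminous and later; skip on Jewel).
        run_or_print rbd pool init "$_pool"
    done
}

# Example: review the commands first, then run them on a Ceph admin node.
#   DRY_RUN=1 create_zstack_pools
#   create_zstack_pools
```

With these example names, you would then enter zs-image-cache, zs-data-volume, and zs-root-volume in Image Cache Pool, Data Volume Pool, and Root Volume Pool respectively.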

Add a SharedBlock Primary Storage

- Zone: By default, the current zone is displayed.

- Name: Enter a name for the primary storage.

The primary storage name must be 1 to 128 characters in length and can contain Chinese characters, letters, digits, hyphens (-), underscores (_), periods (.), parentheses (), colons (:), and plus signs (+).

- Description: Optional. Enter a description for the primary storage.

- Type: Select SharedBlock.

Note:

- SharedBlock primary storages use LUN devices for storage and can work with ImageStore backup storages.

- You can add LUN devices online.

- Currently, SharedBlock primary storages support two shared access protocols: iSCSI and FC.

- Default Storage Space Allocation Strategy: Select a storage space allocation strategy, either thick provisioning or thin provisioning.

- Thick Provisioning: Allocates the required storage space in advance to guarantee sufficient storage capacity and ensure storage performance.

- Thin Provisioning: Allocates storage space as needed to achieve higher storage utilization.

- Storage Network: Enter the storage network specified for the shared storage. The storage network can be the management network of the management node.

Note:

- If you have a dedicated storage network, enter its CIDR.

- You can use the storage network to check the health status of a VM instance.

- Cluster: Select a cluster to which the SharedBlock primary storage is attached.

- LUN: Select one or more LUN devices as needed. Here, you need to enter the unique identifier of each disk.

Note: Make sure that the compute nodes are properly connected to the storage device and have been added to the Cloud.

- Cleanse LUN: Optional. Determine whether to cleanse LUN devices. By default, LUN devices are not cleansed.

- If you choose to cleanse LUN devices, residual data in the LUN devices, such as file system, RAID, or partition table signatures, is forcibly wiped.

- If your LUN devices contain data and you do not cleanse them, adding the LUN devices or attaching the primary storage fails.

- The LUN devices to be added cannot have partitions. Otherwise, you will fail to add the devices.
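Before you add LUN devices, you can list the block devices on a compute node and confirm that a candidate LUN has no partitions. The sketch below relies on lsblk from util-linux. lun_has_partitions and has_partition_rows are hypothetical helpers, and /dev/sdb is an example device. The WWN and SERIAL columns are shown only as one way to see a disk's identifiers, which may not be the exact identifier that the Cloud expects. The wipefs command in the comment, run without options, only lists signatures and does not erase anything.

```shell
# Hypothetical helper: succeed if lsblk TYPE output read from standard input
# contains a partition row ("part").
has_partition_rows() {
    grep -qx part
}

# Hypothetical helper: succeed if block device $1 has partitions
# (lsblk -n: no header, -r: raw output, -o TYPE: only the TYPE column).
lun_has_partitions() {
    lsblk -nr -o TYPE "$1" | has_partition_rows
}

# Examples (as root on a compute node; /dev/sdb is an example device):
#   lsblk -o NAME,SIZE,TYPE,WWN,SERIAL     # list disks with their identifiers
#   lun_has_partitions /dev/sdb && echo "/dev/sdb has partitions"
#   wipefs /dev/sdb                        # list remaining signatures; erases nothing
```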